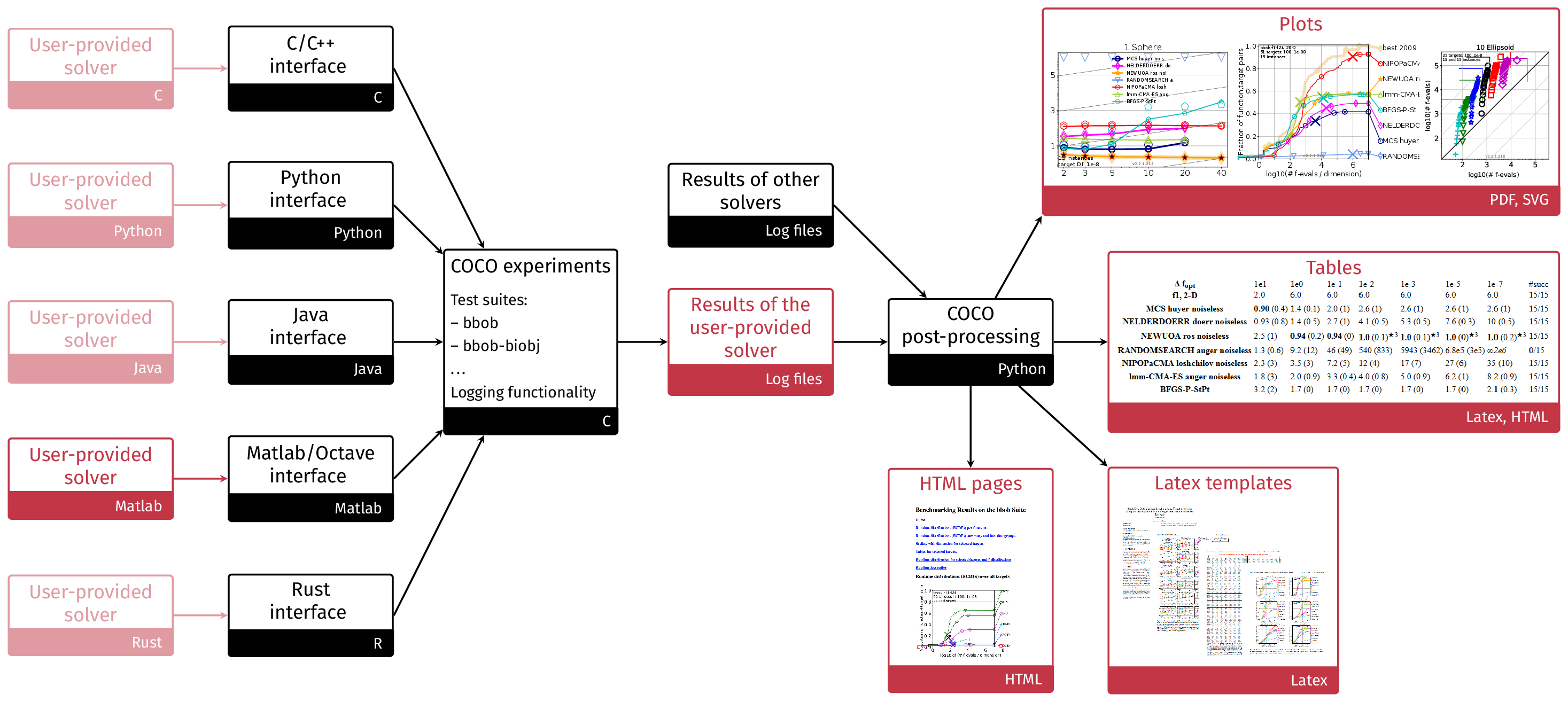

COCO: COmparing Continuous Optimizers

Related links

- New documentation pages for COCO (these replace the content of this page)

- Code page on GitHub (describes how to run experiments)

- Data archive of all officially registered benchmark experiments (also accessible via the postprocessing module)

- Postprocessed data of these archives for browsing

- How to submit a data set

- How to create and use COCO data archives with the cocopp.archiving Python module

- Register to receive news about COCO

- To visit the old COCO webpage, see the Internet Archive

Citation

You may cite this work in a scientific context as follows:

N. Hansen, A. Auger, R. Ros, O. Mersmann, T. Tušar, D. Brockhoff. COCO: A Platform for Comparing Continuous Optimizers in a Black-Box Setting, Optimization Methods and Software, 36(1), pp. 114-144, 2021. [pdf, arXiv]

@ARTICLE{hansen2021coco,
  author = {Hansen, N. and Auger, A. and Ros, R. and Mersmann, O. and Tu{\v s}ar, T. and Brockhoff, D.},
  title = {{COCO}: A Platform for Comparing Continuous Optimizers in a Black-Box Setting},
  journal = {Optimization Methods and Software},
  volume = {36},
  number = {1},
  pages = {114--144},
  year = {2021},
  doi = {10.1080/10556788.2020.1808977}
}